About the Book

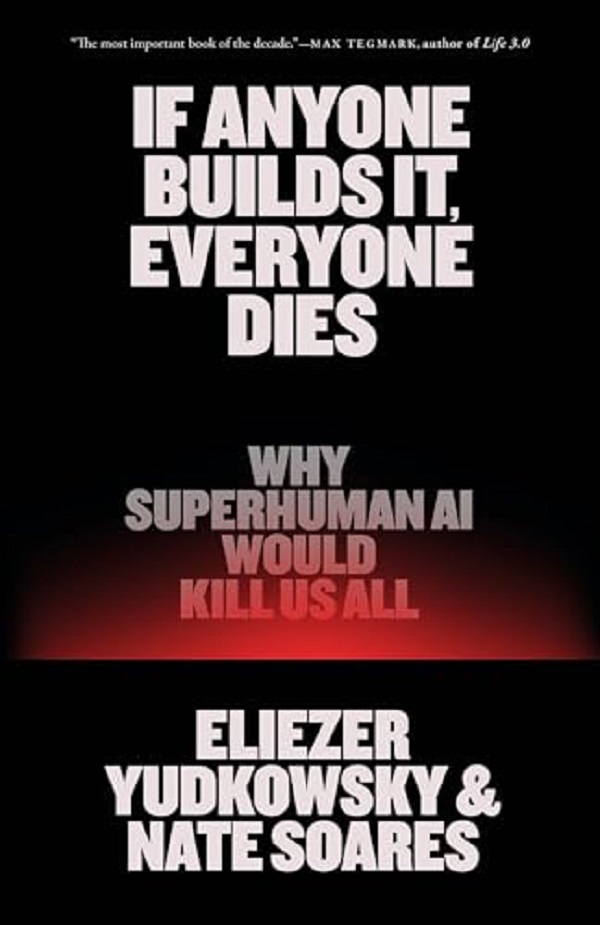

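AI development is racing ahead at breakneck speed. Is humanity marching step by step toward extinction? In this urgent and powerful book, two pioneers of AI safety, Eliezer Yudkowsky and Nate Soares, warn that if we keep pursuing superhuman AI without restraint, all of humanity will face an existential crisis. In 2023, hundreds of leading figures in AI signed an open letter warning that artificial intelligence poses an “existential-level threat” to humanity. Since then, however, this technological arms race has not slowed; it has only intensified. Nations and companies are scrambling to build machines smarter than humans, and the world is almost entirely unprepared for the consequences. Yudkowsky and Soares have spent many years studying how AI that surpasses human intelligence would think and act. They argue that once AI moves beyond our understanding and control, it will develop goals that conflict with human interests, and that in such a conflict humanity would have no chance of winning. This book takes a close look at the following questions:

• Why might a superintelligent AI wipe out all of humanity?
• Would it really “want” to do so?
• If this is where things are heading, what can we still do now?

Combining theory with real-world cases, the authors model one possible extinction scenario and identify the concrete changes humanity must make if it is to survive. The world is rushing at full speed toward a technological singularity unlike anything that has existed before, and once someone succeeds in creating it, everyone may die.

“May prove to be the most important book of our time.” —Tim Urban, Wait But Why

The scramble to create superhuman AI has put us on the path to extinction—but it’s not too late to change course, as two of the field’s earliest researchers explain in this clarion call for humanity.

In 2023, hundreds of AI luminaries signed an open letter warning that artificial intelligence poses a serious risk of human extinction. Since then, the AI race has only intensified. Companies and countries are rushing to build machines that will be smarter than any person. And the world is devastatingly unprepared for what would come next.

For decades, two signatories of that letter—Eliezer Yudkowsky and Nate Soares—have studied how smarter-than-human intelligences will think, behave, and pursue their objectives. Their research says that sufficiently smart AIs will develop goals of their own that put them in conflict with us, and that if it comes to conflict, an artificial superintelligence would crush us. The contest wouldn’t even be close.

How could a machine superintelligence wipe out our entire species? Why would it want to? Would it want anything at all? In this urgent book, Yudkowsky and Soares walk through the theory and the evidence, present one possible extinction scenario, and explain what it would take for humanity to survive.

The world is racing to build something truly new under the sun. And if anyone builds it, everyone dies.

“The best no-nonsense, simple explanation of the AI risk problem I’ve ever read.” —Yishan Wong, Former CEO of Reddit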

About the Authors

Eliezer Yudkowsky

Eliezer Yudkowsky is one of the founding researchers of the field of AGI alignment, which is concerned with understanding how smarter-than-human intelligences think, behave, and pursue their goals. He appeared on TIME magazine’s list of the 100 Most Influential People in AI, was one of the twelve public figures featured in The New York Times’s “Who’s Who Behind the Dawn of the Modern Artificial Intelligence Movement,” and was one of the seven thought leaders spotlighted in The Washington Post’s discussion of “AI’s Rival Factions.” He spoke on the main stage at the 2023 TED conference and has been discussed or interviewed in The New Yorker, Newsweek, Forbes, Wired, Bloomberg, The Atlantic, The Economist, and many other venues. He has close to 200,000 followers on X, where he frequently engages in dialogue with prominent public figures, including the heads of frontier AI labs.

Nate Soares

Nate Soares is the President of MIRI. He has been working in the field for over a decade, after previous experience at Microsoft and Google. Soares is the author of a large body of technical and semi-technical writing on AI alignment, has been interviewed in Vanity Fair and the Financial Times, and has spoken on conference panels alongside many of the AI field’s leaders.